Not long ago, automation meant a robot inside a cage, repeating the same motion for years. Modern factories and warehouses, however, are messy environments: parts change, lighting varies, and humans share the floor. Solving that reality requires more than faster motors or smarter code. Robots need bodies that can sense, reason, and act together. That practical union of perception, decision, and execution is what we call embodied intelligence—systems that learn from interaction and adapt in the real world.

What Is Embodied Intelligence?

Put simply, embodied intelligence is the tight feedback loop between perception (the “eye”), decision (the “brain”), and execution (the “hand”). A robot perceives an object, forms a plan, reaches for it, evaluates the result, and uses that feedback to improve next time. Unlike systems that treat perception, planning, and motion as separate tasks, embodied systems use the robot’s body as part of the thinking process: movement creates data, and that data improves movement.
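The loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Mech-Mind's implementation: the `ToyGraspCell` class, its one-dimensional world, the fixed camera bias, and the `gain` parameter are all hypothetical. It shows only the core idea that each attempt produces an error signal, and that signal improves the next attempt.

```python
from dataclasses import dataclass


@dataclass
class ToyGraspCell:
    """Hypothetical 1-D work cell: the camera reports positions with a fixed, unknown bias."""
    true_position: float = 0.50  # metres, where the part really is
    camera_bias: float = 0.04    # metres, uncorrected measurement offset (assumed)

    def perceive(self) -> float:
        # "Eye": the measured position is shifted by the bias.
        return self.true_position + self.camera_bias

    def execute(self, target: float) -> float:
        # "Hand": move to the target and report the signed miss distance.
        # In a real cell this feedback might come from force or vision sensing.
        return target - self.true_position


def run_loop(cell: ToyGraspCell, attempts: int = 5, gain: float = 0.5) -> list[float]:
    """Run perceive -> decide -> act -> evaluate, returning the absolute miss per attempt."""
    correction = 0.0  # learned estimate of the camera bias
    errors = []
    for _ in range(attempts):
        measured = cell.perceive()
        target = measured - correction  # "Brain": plan using what was learned so far
        miss = cell.execute(target)
        errors.append(abs(miss))
        correction += gain * miss       # feedback: movement creates data
    return errors


if __name__ == "__main__":
    for attempt, err in enumerate(run_loop(ToyGraspCell()), start=1):
        print(f"attempt {attempt}: miss = {err * 1000:.2f} mm")
```

With these assumed values the miss distance halves on every attempt, because each execution feeds its own error back into the next plan.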

Two trends made this practical:

1. Sensors and compute have leapt ahead—high-quality 3D cameras, force sensors, and capable edge processors are now widely available.

2. AI has progressed from narrow pattern recognition to multimodal large models that can reason across vision, language, and touch.

Together, these advances enable robots to generalize across new parts and environments instead of failing when reality deviates from a scripted scenario.

The Three Core Pillars

Three core technologies turn the concept of embodied intelligence into working systems:

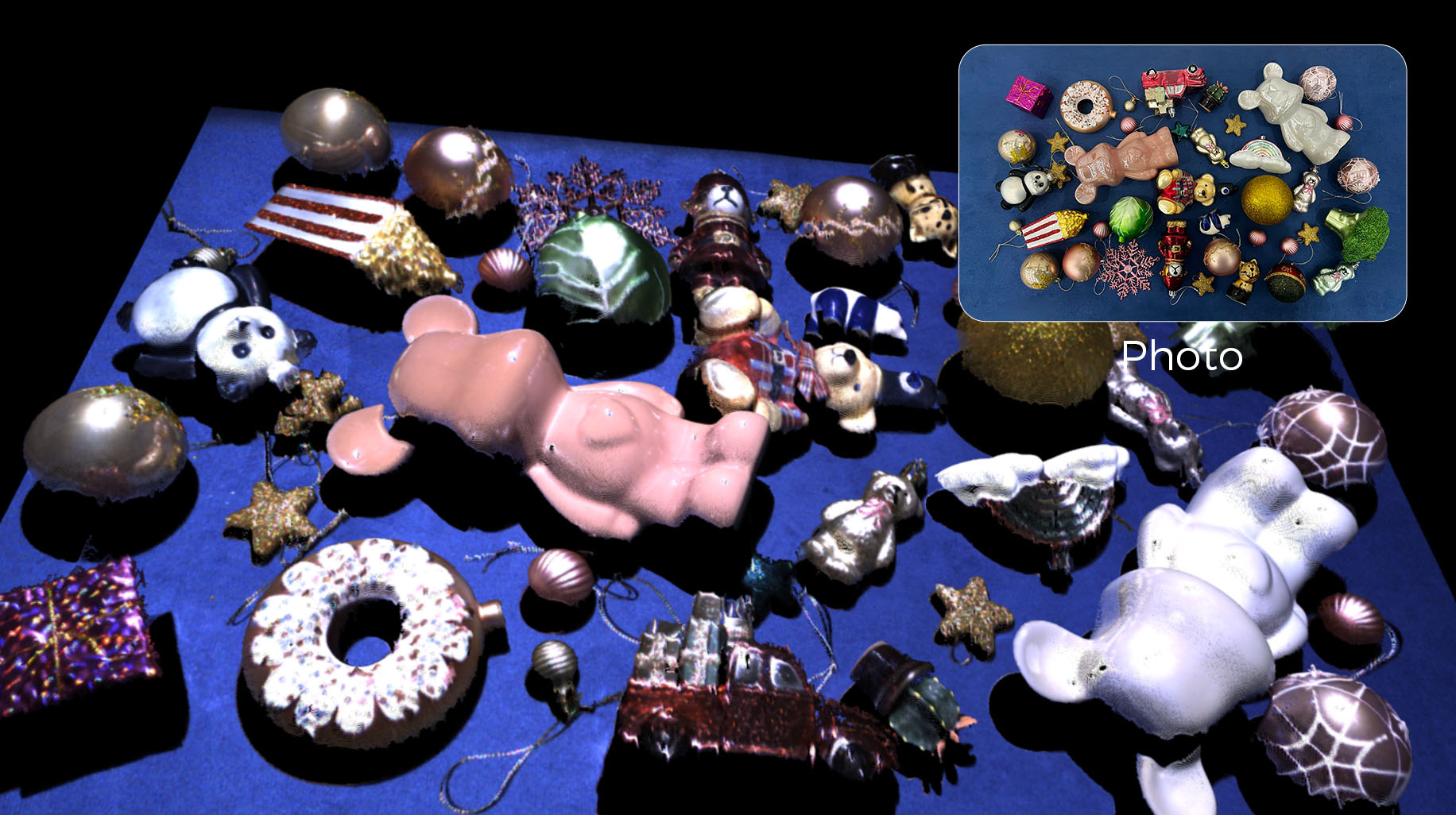

3D perception: Depth sensors and point clouds give robots geometric awareness—not just how objects look, but how they occupy space. This matters for occlusions, orientation, and identifying reliable grasp points.

High-precision point clouds of diverse real goods
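To make "geometric awareness" concrete, here is a minimal sketch of how a depth image is commonly turned into a point cloud using the standard pinhole camera model. It is a generic illustration, not a description of how any particular camera or SDK works. The function name `depth_to_points` and the intrinsic values in the usage example are assumptions chosen for readability.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) points.

    depth  : list of rows, each a list of depth values in metres
    fx, fy : focal lengths in pixels (assumed known from calibration)
    cx, cy : principal point in pixels (assumed known from calibration)
    """
    points = []
    for v, row in enumerate(depth):      # v = pixel row
        for u, z in enumerate(row):      # u = pixel column
            if z <= 0:                   # non-positive depth marks an invalid pixel
                continue
            x = (u - cx) * z / fx        # pinhole model: X = (u - cx) * Z / fx
            y = (v - cy) * z / fy        # pinhole model: Y = (v - cy) * Z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A tiny 2x2 depth image with one invalid pixel; intrinsics are illustrative only.
    tiny_depth = [[1.0, 0.0],
                  [2.0, 1.0]]
    for point in depth_to_points(tiny_depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0):
        print(point)
```

Each valid pixel becomes a 3D coordinate in the camera frame, which is what allows downstream steps to reason about occlusion, orientation, and candidate grasp points rather than appearance alone.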

Multimodal intelligence: Systems that integrate visual input, tactile feedback, and simple language instructions can learn from demonstrations, accept human guidance, and adapt in real time—enabling the leap from “I see an object” to “I know how to grasp it and what to do next.”

Mech-Mind’s AI software suites

Dexterous manipulation: Multi-axis grippers and articulated hands let robots handle irregular shapes, reorient objects in-hand, and perform tasks that once required human dexterity or custom fixtures.

Mech-Hand Five-Finger Dexterous Hand

Why It Matters to Industry

For operations teams, embodied intelligence changes the economics of automation. Instead of building one-off units for a single SKU, companies can deploy adaptable workstations that switch between tasks with minimal downtime. That flexibility shortens commissioning, reduces retooling costs, and spreads integration effort across more use cases.

On the production floor, the benefits are clear: fewer manual fixes, faster changeovers, and higher first-pass success rates. For business leaders, that means greater throughput and lower operational risk. In short, embodied intelligence makes automation far more resilient to the variability that defines real production and logistics environments.

Mech-Mind’s General-Purpose Robot “Eye-Brain-Hand”

Turning research into reliable equipment is an engineering challenge. Sensors must be calibrated, perception must withstand poor lighting and clutter, control loops must run in real time, and end-effectors must be rugged for daily use. Mech-Mind addresses these challenges by integrating three layers—Mech-Eye (3D vision), Mech-GPT (AI brain), and Mech-Hand (dexterous hand)—into a general-purpose portfolio.

What makes this approach practical is engineering discipline rather than marketing. Standardized interfaces, extensive real-world data for model training, and repeatable testing ensure that each layer complements the others. The result is a platform that can be applied across depalletizing, bin-picking, inspection, and light assembly—not by rebuilding mechanics, but by updating perception models and workflows.

Mech-Mind Embodied Intelligence “Eye-Brain-Hand” Robot Station


Independently developed by Mech-Mind, the embodied intelligence “Eye-Brain-Hand” robot station generalizes well across tasks. It quickly interprets natural-language instructions and performs a variety of complex tasks. Drawing on extensive data, it can identify and manipulate many common objects. The all-in-one station is easy to operate and requires no extra programming. It is highly interactive, supports multiple input modes such as voice and text, and is well suited to research, education, and exhibitions.

Looking to the Future

Embodied intelligence reframes robots as partners that perceive, reason, and act in a shared world. In the coming years we’ll see machines that learn new tasks from demonstrations, operate safely alongside people, and transition between roles with far less reengineering. Companies that adopt integrated stacks—robust 3D cameras, adaptive AI software suites, and dexterous hands—will realize that future sooner.

Mech-Mind’s general-purpose robot “Eye-Brain-Hand” approach is one practical path: a grounded, data-driven stack that converts research concepts into industrial value. If you’re considering embodied intelligence for your operation, start with partners who design products for production realities.

True intelligence emerges only when a system can perceive the world and respond—and that is exactly where modern robotics is headed.